The problems with impact factors are well known – I could give you a long list of things to read that explain why, but just start with this blog post from Stephen Curry and go from there.

I have a slide that I use in my talks that sums up one particular problem – that the impact factor (IF) of any given journal tells you absolutely nothing about any given article in that journal. For example, the current IF of Organometallics is just over 4, whereas Nature’s is more than 10 times that at just over 41. But does that mean that every Nature paper is 10 times ‘better’ than every Organometallics paper? (Answer: of course not! – and how on Earth would you measure ‘better’ anyway?). It also doesn’t guarantee that a particular Nature paper will have received more citations than any given Organometallics paper (after all, a wide distribution of citations makes up an IF). Considering the perverse incentives in science, however, I wonder how many people would rather have on their CV an Organometallics paper that has received 50 citations in a year instead of a Nature paper that has garnered only 10 in the same period of time?

Anyway, I digress. The slide I have looks at things from a different point of view. Wouldn’t it be interesting if you could take exactly the same paper and publish it at roughly the same time in a bunch of different journals? Take your fancy-metal-catalyzed-cross-coupling-based synthesis of tenurepleaseamycin and submit it to (and have it published in) Angewandte, JACS, Nature Chem, Science, JOC, Tet Lett and Doklady Chemistry and then sit back and see how the citations roll in. Of course, it’s the same paper – it’s not a better paper in one journal than another, so it will get cited roughly equally in all journals, right? Well, all you can really do is speculate, because if you did try to do exactly that you’d end up really annoying some chemistry-journal editors and you might not get the paper published anywhere (well, I can think of a few places that would probably still take it, but discretion is the better part of valour and all that).

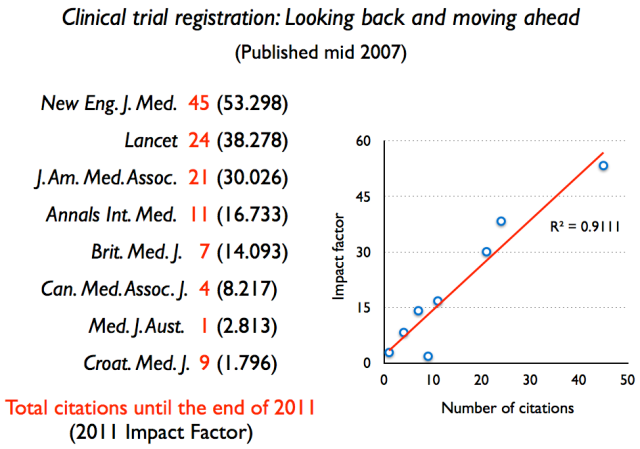

Well, never fear! The experiment has been done. Although it wasn’t an experiment, it wasn’t done for the purpose of comparing citations in different journals and it’s happened more than once. It turns out that in medical publishing, editorials/white papers occasionally get published in more than one journal. So, say hello to ‘Clinical Trial Registration — Looking Back and Moving Ahead’. A few years back, I looked at the citations this paper had received in a range of different journals and the IFs of those journals – the slide from my talk with all of the data on it is included in this post.

There’s a pretty good correlation between the number of citations that this identical paper received in each journal and the IFs of those journals. Of course, perhaps more people read the New England Journal of Medicine than the Medical Journal of Australia and so a wider audience will likely mean a wider potential-citation pool. Whatever the reasons (and it’s not all that difficult to come up with others), the slide shows how silly it is to assume that the IF of a journal has any bearing on how good any particular paper in that journal is. As I have said before, the only way to figure out if a paper is any good is to actually read the damn thing – the name (or IF) of the journal in which a paper is published should never act as a proxy for how awesome (or not) a paper is.
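If you want to check a relationship like this for yourself, here is a minimal Python sketch of a Spearman rank correlation between journal IFs and citation counts. To be loud about it: the numbers in the two lists are placeholders invented purely for illustration and are not the data from the slide, and the function names (`ranks`, `pearson`, `spearman`) are just ones chosen for this example. A rank correlation is used here only because citation counts tend to be skewed; it is one reasonable choice, not necessarily how the slide was analysed.

```python
from statistics import mean


def ranks(values):
    """Return 1-based ranks for values, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    """Plain Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# PLACEHOLDER values, invented for illustration only (NOT the slide's data).
impact_factors = [50.0, 30.0, 12.0, 4.0, 2.0, 1.0]
citations = [300, 120, 150, 20, 25, 3]

rho = spearman(impact_factors, citations)
print(f"Spearman rho = {rho:.2f}")
```

Swap in the real IFs and citation counts for each journal that carried the identical paper and the same few lines will tell you how tightly the two track each other. It still says nothing about why they do.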

So, as well as pointing out one specific flaw in the IF, showing this slide also lets me make a joke about how the correlation would be even better if it weren’t for some (imaginary, I hasten to add) Croatian citation ring… I apologize if I have offended any Croatian doctors who happen to be reading this… but the joke usually gets a laugh.

This is a very interesting experiment. I checked PubMed and you are right: this one paper, with the same authors, was reprinted in 9 different journals in 2007.

Now, the question is one of “reputation”. If you tell me how to calculate or determine the reputation of anything, including journals, then I will agree with you about disregarding IF.

posted by David Usharauli

I think the idea that this reflects readership is a strong and testable hypothesis. Print magazines were, for a long time, required to print their print circulation figures once a year. It should be possible to find that data. For instance, Nature’s print circulation is about 53,000: https://web.archive.org/web/20110513233735/http://www.nature.com/advertising/resources/pdf/2010naturemediakit.pdf

And here’s the analysis of journal circulation and citations: http://neurodojo.blogspot.com/2016/01/journal-circulation-and-citations.html

Stuart, this is great. I have been thinking about this for a while, but couldn’t think of a way to test the idea that citations are linked to prestige. It explains why journal level metrics are pretty static over time despite the papers themselves being ever-changing.

Does the IF correlate with a journal’s circulation? I know that more people subscribe to something like Science or Nature than more specialized journals. I’m sure every field has a few must-read journals with intermediate circulation as well.

A strong correlation between IF and circulation would suggest that scientists cite papers they encounter in their routine scan of the literature, rather than as the result of a systematic search.

See the comment from Zen Faulkes above…

I’ve seen a few papers on this. This one found similar results to yours, albeit slightly weaker:

Perneger TV. 2010. Citation analysis of identical consensus statements revealed journal-related bias. Journal of Clinical Epidemiology 63:660-664. doi:10.1016/j.jclinepi.2009.09.012

The trend held up over a sample of thousands of duplicate papers:

Larivière V, Gingras Y. 2010. The impact factor’s Matthew Effect: A natural experiment in bibliometrics. Journal of the American Society for Information Science and Technology 61:424-427. doi:10.1002/asi.21232

Hi, this is a very nice article.

However, similar slides are used by editors and evaluators to validate the use of IF to judge the ‘excellence’ of a published article. Indeed, this data shows that a paper published in a journal with a high IF will be cited more than the same paper in a journal with a low IF. ‘Therefore’, IF is a good indicator for a given article….☹️

I think what the graph really shows is that article-level metrics (i.e. how many times a paper has been cited) do not necessarily reflect the intrinsic value of that paper, but instead are the consequence of many other factors. Saying “papers in high-IF journals get cited more (on average) than papers in lower-IF journals; therefore, the high-IF papers are ‘better’” requires the assumption that papers with more citations are ‘better’. There’s no compelling evidence for this, and in fact much evidence that the most valued papers are often not the most highly cited (e.g. https://blogs.lse.ac.uk/impactofsocialsciences/2018/05/14/the-academic-papers-researchers-regard-as-significant-are-not-those-that-are-highly-cited/).
